Advanced Audio Transcription (Speech-to-Text)

SipPulse AI's STT (Speech-to-Text) services convert audio files into text with high accuracy, enabling you to enrich your applications with valuable voice data. Our platform offers:

- High-performance proprietary models: pulse-precision (focused on maximum accuracy, resulting in an excellent Word Error Rate, WER) and pulse-speed (optimized for low latency), both with advanced diarization support (identification of multiple speakers).

- Advanced features such as anonymization of sensitive data and Audio Intelligence for structured analysis and automatic insights from transcriptions.

For detailed information on pricing and model specifications, see our Pricing page.

Interactive Playground

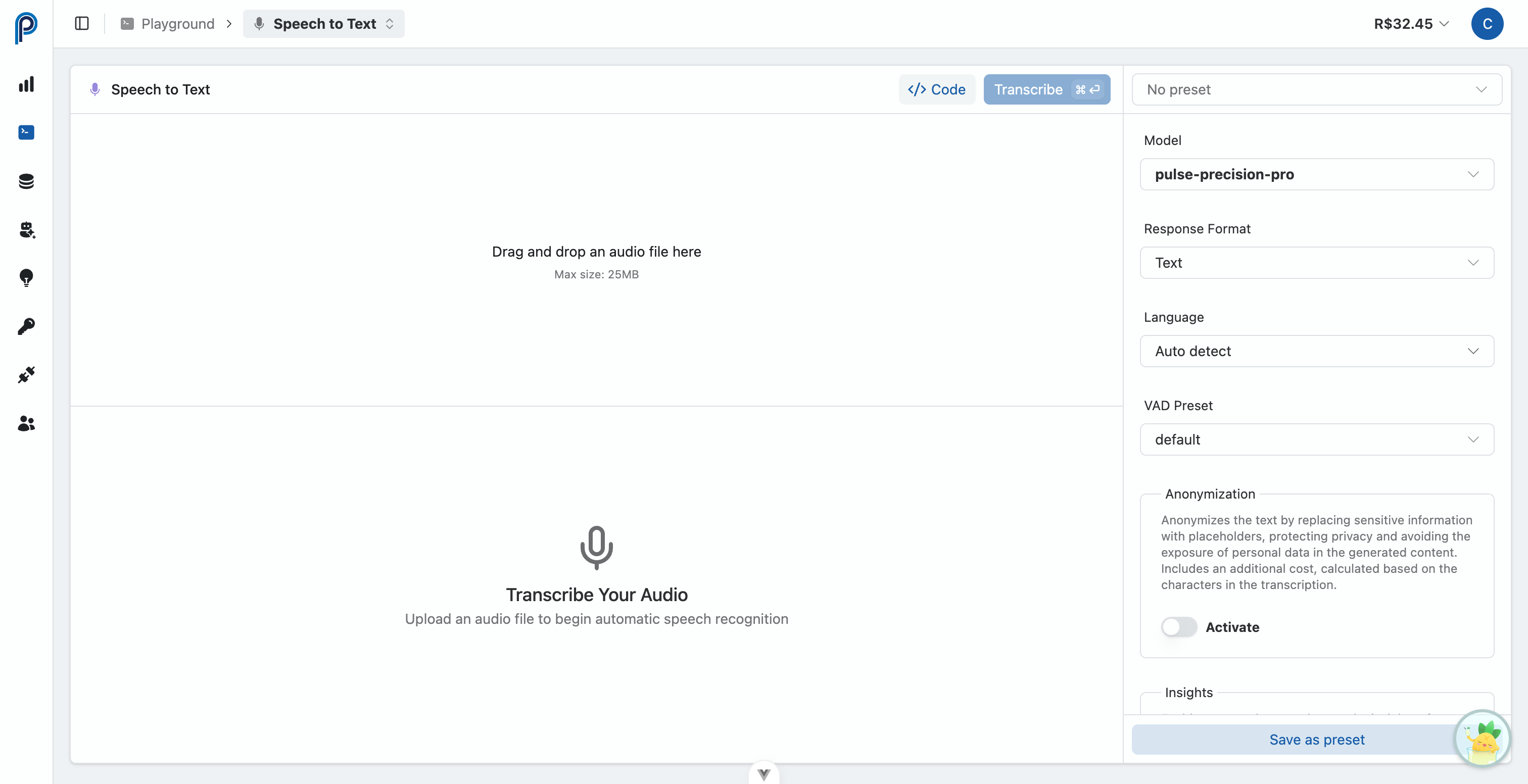

The Interactive Audio Transcription Playground (access here) is the ideal tool to experiment with and validate STT models intuitively:

- Audio File Upload

- Upload audio files (formats: MP3, WAV, PCM, OGG up to 25 MB) by drag-and-drop or using the file selector.

- Transcription Model Selection

- Choose from the available models:

  - pulse-precision: Optimized for the highest transcription accuracy.
  - pulse-speed: Prioritizes processing speed, ideal for use cases requiring lower latency.
  - pulse-precision-pro: Maximum accuracy with stereo diarization for call center recordings. Includes vad_preset for optimized voice activity detection and 100% accurate channel-based speaker identification.
  - whisper-1: OpenAI model.
  - whisper-chat: OpenAI model with high response speed. Ideal for applications requiring fast responses, even with a slight reduction in accuracy compared to pulse-precision.

- Parameter Configuration

- Output Format (format): Set the desired transcription format (text, json, vtt, srt, verbose_json, diarization). Note: diarization is only available for the pulse-precision and pulse-speed models.

- Language (language): Specify the audio language (e.g., pt, en, es).

- Instructions (prompt): Provide instructions or additional context (specific terms, proper names) to guide and refine the transcription process.

- Advanced Features

- Anonymization (anonymize): Enable to automatically mask sensitive data identified in the transcription (e.g., CPF, email, IP addresses). See the Text Redaction documentation for details.

- Audio Intelligence (insights): Enable to receive structured analyses along with the transcription. Options include summarization, topic identification, and sentiment analysis, among others, processed directly at the endpoint.

- Run Transcription

- Click the Transcribe button to process the audio using the selected model and parameters. The transcription result will be displayed in the interface.

- View Integration Code

- The "View Code" feature automatically generates code samples for integration in cURL (Bash), Python, and JavaScript (Node.js). These examples are pre-configured with the same parameters used in the Playground, making it easy to implement transcription functionality in your applications.
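Before uploading, it can help to check a file against the formats and size limit listed for the Playground. The following is a minimal sketch of such a pre-flight check. The function name check_audio_file is hypothetical, the 25 MB limit is read as 25 MiB, and whether the REST API enforces the same limits as the Playground is an assumption.

```python
from pathlib import Path

# Limits taken from the Playground description; applying them to the REST API
# as well is an assumption.
ALLOWED_EXTENSIONS = {".mp3", ".wav", ".pcm", ".ogg"}
MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # assumes "25 MB" means 25 MiB


def check_audio_file(path: str) -> list[str]:
    """Return a list of problems found; an empty list means the file looks OK."""
    file = Path(path)
    if not file.is_file():
        return [f"{path}: file not found"]

    problems = []
    if file.suffix.lower() not in ALLOWED_EXTENSIONS:
        problems.append(
            f"{file.suffix or '(no extension)'} is not one of {sorted(ALLOWED_EXTENSIONS)}"
        )
    size = file.stat().st_size
    if size > MAX_UPLOAD_BYTES:
        problems.append(f"file is {size} bytes, above the {MAX_UPLOAD_BYTES}-byte limit")
    return problems
```

The check only looks at the file extension and size; it does not inspect the audio encoding itself.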

Audio Insights

Audio Insights provides automatic analyses of your transcriptions directly in the API response. This feature is ideal for users who need quick, built-in analyses without additional API calls.

Available Insight Types

Text Summarization

Generates a concise summary of the transcription, highlighting the most relevant information.

{
  "insights": {
    "summarization": true
  }
}

Topic Detection

Identifies main topics discussed in the transcription. Each topic includes a confidence value (0 to 1) indicating detection accuracy. You can optionally specify topics to detect (max 20).

{
  "insights": {
    "topic_detection": {
      "topics": ["pricing", "support", "billing"]
    }
  }
}

If topics is omitted, the model will automatically detect relevant topics.
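The request shape above can be assembled programmatically. The sketch below builds the insights object and enforces the 20-topic limit on the client side. The helper name build_topic_detection_insights is hypothetical, and sending an empty topic_detection object when no topics are given is an assumption about how automatic detection is requested.

```python
MAX_TOPICS = 20  # limit stated in this section


def build_topic_detection_insights(topics=None, summarization=False):
    """Build the "insights" object for topic detection, optionally with a summary."""
    cleaned = []
    if topics is not None:
        # Strip whitespace and drop duplicates while keeping the caller's order.
        cleaned = list(dict.fromkeys(t.strip() for t in topics if t.strip()))
        if len(cleaned) > MAX_TOPICS:
            raise ValueError(f"at most {MAX_TOPICS} topics are allowed, got {len(cleaned)}")

    # Assumption: an empty object asks the model to detect topics on its own.
    insights = {"topic_detection": {"topics": cleaned} if cleaned else {}}
    if summarization:
        insights["summarization"] = True
    return {"insights": insights}
```

For example, build_topic_detection_insights(["pricing", "support", "billing"]) produces the same object as the JSON request shown in this section.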

Response format:

{

"audio_insights": {

"content": {

"topic_detection": [

{

"label": "pricing",

"confidence": 0.92,

"fragment": "We need to discuss the new pricing model...",

"timestamp": "00:01:23"

}

]

}

}

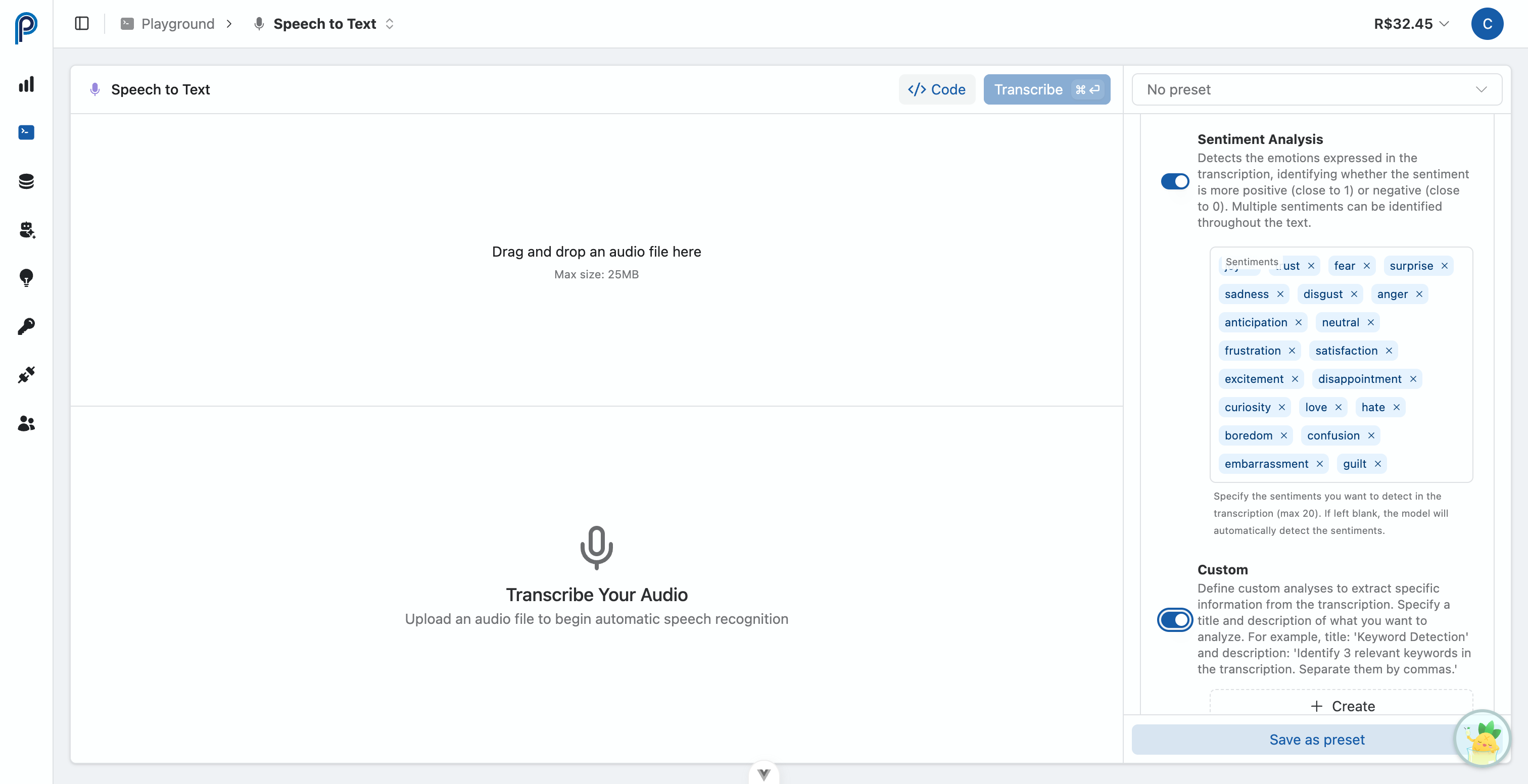

}Sentiment Analysis

Detects emotions expressed in the transcription. You can specify sentiments to detect (max 20) or use defaults.

Default sentiments: joy, trust, fear, surprise, sadness, disgust, anger, anticipation, neutral, frustration, satisfaction, excitement, disappointment, curiosity, love, hate, boredom, confusion, embarrassment, guilt

{
  "insights": {
    "sentiment_analysis": {
      "sentiments": ["satisfaction", "frustration", "neutral"]
    }
  }
}

If sentiments is omitted, all default sentiments will be analyzed.

Response format:

{
  "audio_insights": {
    "content": {
      "sentiment_analysis": [
        {
          "label": "satisfaction",
          "score": 0.85,
          "fragment": "I'm really happy with the service...",
          "timestamp": "00:02:45"
        }
      ]
    }
  }
}

Custom Analysis

Define your own analyses to extract specific information from the transcription. Each custom analysis requires a title, description, and output type.

{
  "insights": {
    "custom": [
      {
        "title": "Keywords",
        "description": "Extract 5 main keywords from the conversation",
        "type": "string"
      },
      {
        "title": "Call Resolution",
        "description": "Was the customer's issue resolved? Answer true or false",
        "type": "boolean"
      },
      {
        "title": "Satisfaction Score",
        "description": "Rate the customer satisfaction from 1 to 10",
        "type": "number"
      }
    ]
  }
}

Audio Insights vs Structured Analysis

Audio Insights is great for quick, built-in analyses during transcription—ideal for common use cases like summarization and sentiment detection. For more advanced scenarios with custom presets, complex JSON schemas, and reusable templates, use Structured Analysis as a separate API call.
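Once a response arrives, the audio_insights.content object can be read like any other JSON. The sketch below assumes the response shapes shown in the topic detection and sentiment analysis examples in this section. The function names and the 0.5 confidence threshold are hypothetical choices, not part of the API.

```python
# Sample built from the response examples in this section.
SAMPLE_RESPONSE = {
    "audio_insights": {
        "content": {
            "topic_detection": [
                {"label": "pricing", "confidence": 0.92,
                 "fragment": "We need to discuss the new pricing model...",
                 "timestamp": "00:01:23"},
            ],
            "sentiment_analysis": [
                {"label": "satisfaction", "score": 0.85,
                 "fragment": "I'm really happy with the service...",
                 "timestamp": "00:02:45"},
            ],
        }
    }
}


def _insight_items(response: dict, kind: str) -> list:
    """Return the list stored under audio_insights.content.<kind>, or []."""
    return response.get("audio_insights", {}).get("content", {}).get(kind, [])


def confident_topics(response: dict, min_confidence: float = 0.5) -> list:
    """Labels of detected topics whose confidence reaches the threshold."""
    return [
        item["label"]
        for item in _insight_items(response, "topic_detection")
        if item.get("confidence", 0) >= min_confidence
    ]


def strongest_sentiment(response: dict):
    """Label of the highest-scoring sentiment, or None if none was returned."""
    items = _insight_items(response, "sentiment_analysis")
    if not items:
        return None
    return max(items, key=lambda item: item.get("score", 0))["label"]
```

Using .get with defaults keeps the helpers safe when a request did not enable a given insight type and the corresponding key is absent.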

Response Formats

The response_format parameter controls the output structure of the transcription:

| Format | Description | Use Case |
|---|---|---|
| text | Plain text transcription | Simple text extraction |
| json | JSON object with text field | Programmatic access to text |
| verbose_json | JSON with segments, language, and duration metadata | Detailed analysis with timestamps |
| srt | SubRip subtitle format | Video subtitles |
| vtt | WebVTT subtitle format | Web video captions |
| diarization | Speaker-separated transcription with timestamps | Multi-speaker conversations |
| stereo_diarization | Speaker-separated transcription using audio channels (L/R) | Stereo call recordings |

Diarization Availability

The diarization format is only available with proprietary models (pulse-precision, pulse-speed). OpenAI models (whisper-1) do not support speaker diarization.
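A client can guard against unsupported combinations before sending a request. The sketch below encodes only the pairings stated in this document (diarization with pulse-precision and pulse-speed, stereo_diarization with pulse-precision-pro). The function name is hypothetical, and treating every other format as available on every model is an assumption.

```python
# Format-to-model restrictions stated in this document. Formats not listed
# here are assumed to work with every model.
FORMAT_MODELS = {
    "diarization": {"pulse-precision", "pulse-speed"},
    "stereo_diarization": {"pulse-precision-pro"},
}


def is_format_supported(model: str, output_format: str) -> bool:
    """Return False only for combinations this document rules out."""
    allowed_models = FORMAT_MODELS.get(output_format)
    return allowed_models is None or model in allowed_models
```

The models endpoint remains the authoritative source for what is available to an organization; this table is only a local shortcut.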

Stereo Diarization

For call center recordings where each speaker is on a separate audio channel (left/right), use the stereo diarization feature with the pulse-precision-pro model.

| Aspect | Standard Diarization | Stereo Diarization |
|---|---|---|
| Speaker identification | AI-based detection | Channel-based (L/R) |
| Accuracy | Good | Perfect (100%) |
| Performance | Normal | Faster |
| Speaker labels | SPEAKER 1, SPEAKER 2... | SPEAKER_L, SPEAKER_R |

Perfect for Call Centers

Stereo diarization provides 100% accurate speaker identification since each audio channel represents one speaker. No AI inference needed—just channel separation.

VAD Preset for Telephony

The pulse-precision-pro model includes optimized Voice Activity Detection (VAD) presets:

| Preset | Use Case | Description |
|---|---|---|
| default | General audio | Standard VAD settings for high-quality audio (16kHz+) |
| telephony | Phone calls | Optimized for 8kHz narrow-band telephony audio |

When to Use Telephony Preset

Use vad_preset=telephony when transcribing call recordings from PBX systems. This preset is tuned for the specific characteristics of telephone audio, improving accuracy in detecting speech boundaries and reducing false positives from line noise.
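When the origin of a recording is unknown, the sample rate of a WAV file gives a reasonable hint. The sketch below reads it with Python's standard wave module and suggests a preset. The function name is hypothetical, and the cut-off at 8000 Hz is an assumption: the document only describes 8kHz audio as telephony and 16kHz+ as default, so rates in between fall back to default here.

```python
import wave


def suggest_vad_preset(wav_path: str) -> str:
    """Suggest "telephony" for narrow-band WAV files and "default" otherwise."""
    with wave.open(wav_path, "rb") as wav_file:
        sample_rate = wav_file.getframerate()
    # Assumption: anything at or below 8 kHz is treated as telephony audio.
    return "telephony" if sample_rate <= 8000 else "default"
```

This only works for uncompressed WAV input; other containers such as MP3 or OGG would need a different way to read the sample rate.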

For a complete guide with code examples and structured analysis integration, see Stereo Call Transcription.

Using the REST API

Use the native /v1/asr/transcribe endpoint for full control over all features and models, including advanced pulse-precision and pulse-speed with diarization.

# Example: Transcription with diarization, anonymization, and Audio Intelligence (summarization and topics)
# using the pulse-precision model.
# The api-key header uses double quotes so the shell expands $SIPPULSE_API_KEY.
curl -X POST 'https://api.sippulse.ai/v1/asr/transcribe' \
  -H "api-key: $SIPPULSE_API_KEY" \
  -F 'file=@audio.wav' \
  -F 'model=pulse-precision' \
  -F 'format=verbose_json' \
  -F 'language=pt' \
  -F 'diarization=true' \
  -F 'anonymize=true' \
  -F 'insights=["summarization","topics"]'

import os

import requests
import json
from typing import Optional


def transcribe_audio_advanced(
    file_path: str,
    model: str = "pulse-precision",
    output_format: str = "verbose_json",
    language: str = "pt",
    enable_diarization: bool = True,
    enable_anonymization: bool = True,
    audio_insights: Optional[list] = None,
) -> dict:
    """
    Transcribes an audio file using the /v1/asr/transcribe endpoint
    of SipPulse AI, with support for advanced features.

    Args:
        file_path: Path to the audio file.
        model: Transcription model to use (e.g., "pulse-precision", "pulse-speed").
        output_format: Output format for the transcription.
        language: Audio language code.
        enable_diarization: Enable/disable diarization (speaker separation).
        enable_anonymization: Enable/disable sensitive data anonymization.
        audio_insights: List of Audio Intelligence analyses to apply (e.g., ["summarization", "topics"]).
    """
    api_url = "https://api.sippulse.ai/v1/asr/transcribe"
    headers = {"api-key": os.getenv("SIPPULSE_API_KEY")}

    with open(file_path, "rb") as audio_file:
        files = {"file": audio_file}
        # Form fields are sent as strings, so booleans become "true"/"false".
        payload = {
            "model": model,
            "format": output_format,
            "language": language,
            "diarization": str(enable_diarization).lower(),
            "anonymize": str(enable_anonymization).lower(),
        }
        if audio_insights:
            payload["insights"] = json.dumps(audio_insights)

        # Send the request while the file is still open.
        response = requests.post(api_url, headers=headers, files=files, data=payload)
        response.raise_for_status()
        return response.json()


if __name__ == "__main__":
    # Replace "audio.wav" with your audio file path
    # The SIPPULSE_API_KEY environment variable must be set
    try:
        transcription_result = transcribe_audio_advanced(
            "audio.wav",
            model="pulse-precision",
            audio_insights=["summarization", "topics"],
        )
        print(json.dumps(transcription_result, indent=2, ensure_ascii=False))
    except FileNotFoundError:
        print("Error: Audio file not found. Check the path.")
    except requests.exceptions.HTTPError as e:
        print(f"API error: {e.response.status_code} - {e.response.text}")
    except Exception as e:
        print(f"An unexpected error occurred: {e}")

// Node.js with node-fetch and form-data

import fs from "fs";
import FormData from "form-data";
import fetch from "node-fetch"; // Make sure node-fetch@2 is installed or use Node 18+ native fetch

async function transcribeAudioAdvanced(
  filePath,
  model = "pulse-precision",
  outputFormat = "verbose_json",
  language = "pt",
  enableDiarization = true,
  enableAnonymization = true,
  audioInsights = ["summarization", "topics"]
) {
  const apiUrl = "https://api.sippulse.ai/v1/asr/transcribe";
  const apiKey = process.env.SIPPULSE_API_KEY;

  if (!apiKey) {
    throw new Error("The SIPPULSE_API_KEY environment variable is not set.");
  }

  const form = new FormData();
  form.append("file", fs.createReadStream(filePath));
  form.append("model", model);
  form.append("format", outputFormat);
  form.append("language", language);
  form.append("diarization", String(enableDiarization).toLowerCase());
  form.append("anonymize", String(enableAnonymization).toLowerCase());
  if (audioInsights && audioInsights.length > 0) {
    form.append("insights", JSON.stringify(audioInsights));
  }

  const response = await fetch(apiUrl, {
    method: "POST",
    headers: {
      "api-key": apiKey,
      // FormData sets Content-Type automatically with the correct boundary
      // ...form.getHeaders() // Uncomment if using an older form-data version that requires this
    },
    body: form,
  });

  if (!response.ok) {
    const errorBody = await response.text();
    throw new Error(`API error: ${response.status} ${response.statusText} - ${errorBody}`);
  }

  return response.json();
}

// Usage example:
// (async () => {
//   try {
//     // Replace "audio.wav" with your audio file path
//     const result = await transcribeAudioAdvanced("audio.wav");
//     console.log(JSON.stringify(result, null, 2));
//   } catch (error) {
//     console.error("Failed to transcribe audio:", error);
//   }
// })();

Additional Cost Considerations:

- Using Diarization, Anonymization, and Audio Intelligence features may incur additional costs, calculated per token or per processed character. Check your account Dashboard for detailed cost tracking.

Model Listing

To check the STT models currently available for your organization, use the following endpoint:

# The api-key header uses double quotes so the shell expands $SIPPULSE_API_KEY.
curl -X GET 'https://api.sippulse.ai/v1/asr/models' \
  -H "api-key: $SIPPULSE_API_KEY"

import os

import requests
import json


def list_available_stt_models() -> dict:
    """
    Lists the Speech-to-Text (STT) models available in the SipPulse AI API.
    """
    api_url = "https://api.sippulse.ai/v1/asr/models"
    headers = {"api-key": os.getenv("SIPPULSE_API_KEY")}

    response = requests.get(api_url, headers=headers)
    response.raise_for_status()
    return response.json()


if __name__ == "__main__":
    # The SIPPULSE_API_KEY environment variable must be set
    try:
        models = list_available_stt_models()
        print("Available STT models:")
        print(json.dumps(models, indent=2))
    except requests.exceptions.HTTPError as e:
        print(f"API error: {e.response.status_code} - {e.response.text}")
    except Exception as e:
        print(f"An unexpected error occurred: {e}")

// Node.js with node-fetch

// import fetch from "node-fetch"; // If not using Node 18+ with native fetch

async function listAvailableSTTModels() {
  const apiUrl = "https://api.sippulse.ai/v1/asr/models";
  const apiKey = process.env.SIPPULSE_API_KEY;

  if (!apiKey) {
    throw new Error("The SIPPULSE_API_KEY environment variable is not set.");
  }

  const response = await fetch(apiUrl, {
    headers: { "api-key": apiKey },
  });

  if (!response.ok) {
    const errorBody = await response.text();
    throw new Error(`API error: ${response.status} ${response.statusText} - ${errorBody}`);
  }

  return response.json();
}

// Usage example:
// (async () => {
//   try {
//     const models = await listAvailableSTTModels();
//     console.log("Available STT models:");
//     console.log(JSON.stringify(models, null, 2));
//   } catch (error) {
//     console.error("Failed to list STT models:", error);
//   }
// })();

OpenAI SDK

You can use SipPulse AI's transcription models, including proprietary pulse-precision and pulse-speed as well as whisper-1, through the official OpenAI SDK. To do this, set the baseURL parameter to the SipPulse AI endpoint, which is compatible with the OpenAI API for transcriptions.

import OpenAI from "openai";
import fs from "fs"; // For reading the file in Node.js

const sippulseOpenAI = new OpenAI({
  apiKey: process.env.SIPPULSE_API_KEY, // Your SipPulse API key
  baseURL: "https://api.sippulse.ai/v1/openai", // SipPulse AI compatibility endpoint
});

async function transcribeWithSippulseOpenAI(audioFilePath, modelName = "pulse-precision") {
  // In Node.js, provide a File-like object, such as a ReadableStream.
  // In the browser, you can use a File object from an <input type="file">.
  const audioFileStream = fs.createReadStream(audioFilePath);

  try {
    console.log(`Starting transcription with model: ${modelName}`);
    const response = await sippulseOpenAI.audio.transcriptions.create({
      file: audioFileStream, // Can be a Blob, ReadableStream, or File object
      model: modelName, // E.g., "pulse-precision", "pulse-speed", or "whisper-1"
      response_format: "verbose_json", // Detailed response format
      temperature: 0.0, // For more deterministic transcription
      // Other parameters supported by the OpenAI transcription API can be added here
    });

    console.log("Transcription completed:");
    console.log(JSON.stringify(response, null, 2));
    return response;
  } catch (error) {
    console.error("Error during transcription with OpenAI SDK:", error);
    throw error;
  }
}

// Usage example in Node.js:
// (async () => {
//   try {
//     // Replace "audio.wav" with the actual file path
//     await transcribeWithSippulseOpenAI("audio.wav", "pulse-precision");
//     // await transcribeWithSippulseOpenAI("audio.wav", "whisper-1");
//   } catch (e) {
//     // Error already handled inside the function
//   }
// })();

from openai import OpenAI

import json
import os

# Configure the OpenAI client to use the SipPulse AI endpoint
client = OpenAI(
    api_key=os.getenv("SIPPULSE_API_KEY"),  # Your SipPulse API key
    base_url="https://api.sippulse.ai/v1/openai",  # SipPulse AI compatibility endpoint
)


def transcribe_with_sippulse_openai(file_path: str, model_name: str = "pulse-precision"):
    """
    Transcribes an audio file using a SipPulse AI model
    through the OpenAI-compatible interface.
    """
    try:
        with open(file_path, "rb") as audio_file:
            print(f"Starting transcription with model: {model_name}")
            result = client.audio.transcriptions.create(
                file=audio_file,
                model=model_name,  # E.g., "pulse-precision", "pulse-speed", or "whisper-1"
                response_format="verbose_json",
                temperature=0.0,
                # Other parameters supported by the OpenAI transcription API can be added here
            )
            print("Transcription completed.")
            return result
    except Exception as e:
        print(f"Error during transcription with OpenAI SDK: {e}")
        raise


if __name__ == "__main__":
    # The SIPPULSE_API_KEY environment variable must be set
    # Replace "audio.wav" with your audio file path
    try:
        # Example with pulse-precision
        transcription_result = transcribe_with_sippulse_openai("audio.wav", model_name="pulse-precision")

        # Example with whisper-1 (if available and desired)
        # transcription_result = transcribe_with_sippulse_openai("audio.wav", model_name="whisper-1")

        if transcription_result:
            # Print the transcription result in a readable format
            print(json.dumps(transcription_result.model_dump(), indent=2, ensure_ascii=False))
    except FileNotFoundError:
        print("Error: Audio file 'audio.wav' not found.")
    except Exception:
        # Error already handled and printed by the function
        pass

Important Limitation:

- When using the OpenAI SDK (even with baseURL pointing to SipPulse AI), advanced and proprietary SipPulse AI features such as diarization, anonymize, or insights are not supported. These parameters are specific to the native SipPulse AI REST API (/v1/asr/transcribe). To access all features, use the native REST API directly.
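An application that supports both integration paths can pick one from the parameters of each request. The sketch below illustrates that routing rule. The function name choose_interface and the return values "native" and "openai" are hypothetical, and only the three parameters named in the limitation above are treated as native-only.

```python
# Parameters this document lists as unavailable through the OpenAI SDK.
NATIVE_ONLY_PARAMS = {"diarization", "anonymize", "insights"}


def choose_interface(params: dict) -> str:
    """Return "native" if any native-only parameter is enabled, else "openai"."""
    enabled = {
        name
        for name, value in params.items()
        if name in NATIVE_ONLY_PARAMS and value  # ignore False, None, empty lists
    }
    return "native" if enabled else "openai"
```

A request such as {"model": "pulse-precision", "anonymize": True} would go to /v1/asr/transcribe, while a plain transcription request could use either path.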

Best Practices

To achieve the best results and optimize the use of STT models:

- Audio Quality: Provide audio with the highest possible clarity, minimizing background noise and ensuring good voice capture.

- Splitting Long Audio Files: For long audio files (e.g., >60 minutes), consider splitting them into smaller segments before submitting for transcription. This can improve performance and process management.

- Strategic Use of Diarization: In recordings of dialogues, meetings, or any scenario with multiple speakers, use the diarization feature (available in the pulse-precision and pulse-speed models). Correct identification and separation of speakers significantly enriches the analysis and usability of the transcription.

- Conscious Anonymization: Enable anonymization whenever the transcription may contain sensitive personal data. Be aware of the additional costs associated with this processing.

- Audio Intelligence for Immediate Insights: Combine transcription with Audio Intelligence features (e.g., summarization, topic identification, sentiment analysis) to extract value and insights automatically and immediately, directly in the API response.

- Cost Monitoring: Regularly monitor consumption and costs associated with transcriptions and advanced features through your SipPulse AI account Dashboard.
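The advice on splitting long audio files can be sketched with Python's standard wave module for uncompressed WAV input. The function name split_wav, the 10-minute default chunk length, and the output naming scheme are hypothetical choices. The sketch cuts at fixed time boundaries, so a word may be split across two chunks.

```python
import wave
from pathlib import Path


def split_wav(src: str, out_dir: str, chunk_seconds: int = 600) -> list[Path]:
    """Split a WAV file into consecutive chunks of at most chunk_seconds each."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    chunks = []

    with wave.open(src, "rb") as reader:
        params = reader.getparams()
        frames_per_chunk = reader.getframerate() * chunk_seconds
        index = 0
        while True:
            frames = reader.readframes(frames_per_chunk)
            if not frames:
                break  # end of file reached
            target = out / f"{Path(src).stem}_{index:03d}.wav"
            with wave.open(str(target), "wb") as writer:
                # Copy channels, sample width and rate; the frame count in the
                # header is corrected automatically when the file is closed.
                writer.setparams(params)
                writer.writeframes(frames)
            chunks.append(target)
            index += 1

    return chunks
```

Each chunk can then be submitted as a separate transcription request. Timestamps in the results are relative to the start of each chunk, so an offset of index * chunk_seconds would need to be added when merging them.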

Frequently Asked Questions (FAQ)

What is the main difference between the pulse-precision and pulse-speed models?

- pulse-precision: Optimized for maximum transcription accuracy, making it the ideal choice when text accuracy is the most critical factor. This model tends to have a lower Word Error Rate (WER), but may have slightly higher latency.

- pulse-speed: Prioritizes processing speed and lower latency, suitable for applications requiring faster responses, even if this means slightly lower accuracy compared to pulse-precision.

Is it possible to use the diarization feature with the whisper-1 model via SipPulse AI?

No. The diarization feature is an advanced and exclusive feature of SipPulse AI's proprietary models: pulse-precision and pulse-speed. These models are specifically designed to provide detailed analysis of audio with multiple speakers.

How can I get structured analyses, such as summarization or topics, from my transcription?

To receive structured analyses, use the insights parameter when making a request to the native SipPulse AI REST API endpoint (/v1/asr/transcribe). Specify a list with the desired analyses (e.g., ["summarization", "topics"]). The results of these analyses will be included in the audio_insights object in the JSON response. The "View Code" feature in the SipPulse AI Playground can generate examples of how to correctly format this parameter in your request.

What is the average processing time for a transcription?

Processing time may vary, but generally corresponds to a few seconds per minute of audio. Factors such as the selected model (pulse-speed tends to be faster), audio duration and complexity, and current system load can influence the total time.