SipPulse AI - Testing Voice Agents

Importance

Thoroughly testing voice agents is fundamental to ensuring successful deployments in real-world environments. This chapter describes the methodology and best practices for comprehensive voice agent testing.

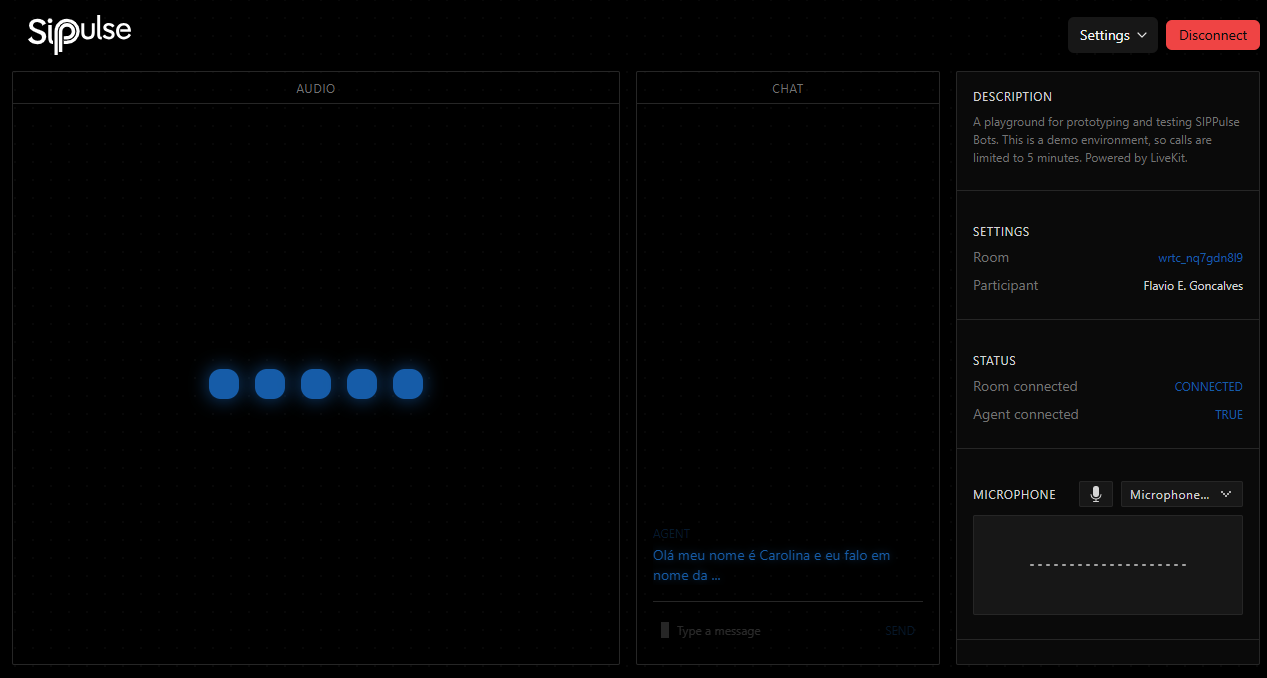

Voice Testing Playground Interface

The SipPulse AI platform offers a dedicated playground for evaluating agents in a realistic environment:

Interface Components

Main Sections:

- AUDIO: Displays audio visualization during voice interactions

- CHAT: Shows real-time transcription of the conversation

- SETTINGS/INFORMATION: Provides configuration options and status information

Key Interface Elements:

- Header Controls: Settings menu and Disconnect button

- Audio Visualization: Blue waveform indicators showing voice activity

- Chat Window: Text transcription of user and agent speech

- Room Information: Unique WebRTC room identifier (e.g., wrtc_nq7gdh8i9)

- Status Indicators: Connection status for room and agent

- Microphone Settings: Audio input device selection and level monitoring

Testing Limitations:

- As stated in the playground description: "A playground for prototyping and testing SipPulse Bots. This is a demo environment, so calls are limited to 5 minutes."

Best Practices for Voice Agent Design

Guidelines for Handling Critical Data

Avoid Collecting Critical Data by Voice:

- Important: DO NOT use voice recognition to collect names or email addresses

- Speech recognition consistently performs poorly on names, on all platforms, because names vary widely in pronunciation

- Email addresses have even higher error rates, especially with domain names

- Instead of trying to collect this information by voice, direct users to alternative channels:

  - Offer to send an SMS link for registration

  - Suggest visiting a website to complete their information

  - Offer the option to speak with a human representative

Conversational Design Principles:

- Keep prompts brief: Use short, clear phrases to maintain conversation pace

- Limit options: Present no more than 3-4 choices at once to avoid cognitive overload

- Progressive disclosure: Reveal information gradually instead of all at once

- Ask simple, direct questions, such as: "Would you like to speak with the sales department?"
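The "limit options" and "progressive disclosure" principles can be applied mechanically when a menu has many entries. The following is a minimal sketch that assumes the menu wording is generated in your own application code. `build_menu_prompts` is a hypothetical helper, not a SipPulse AI API. It splits a long option list into spoken prompts of at most three choices each, and offers the next group only when more options remain.

```python
from typing import List


def build_menu_prompts(options: List[str], max_per_prompt: int = 3) -> List[str]:
    """Split a long option list into short spoken prompts (hypothetical helper)."""
    if max_per_prompt < 1:
        raise ValueError("max_per_prompt must be at least 1")
    prompts = []
    for start in range(0, len(options), max_per_prompt):
        chunk = options[start:start + max_per_prompt]
        # Read one or two choices as "A or B"; three or more as "A, B, or C".
        if len(chunk) <= 2:
            choices = " or ".join(chunk)
        else:
            choices = ", ".join(chunk[:-1]) + ", or " + chunk[-1]
        prompt = f"You can choose {choices}."
        # Progressive disclosure: mention the next group only if one exists.
        if start + max_per_prompt < len(options):
            prompt += " Say 'more' to hear other options."
        prompts.append(prompt)
    return prompts


for line in build_menu_prompts(["sales", "support", "billing", "returns", "careers"]):
    print(line)
```

Keeping the chunk size as a parameter lets you compare three-option and four-option menus during testing, without rewriting the prompts by hand.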

Implementation Recommendations

Pre-Deployment Checklist:

- Test with at least 10-15 diverse speakers for each critical interaction flow

- Verify that complex information collection has alternative pathways

- Ensure fallback mechanisms work correctly when recognition fails

- Test with various background noise profiles
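The checklist item on fallback mechanisms can also be checked offline. You replay a sequence of recognition outcomes against the escalation rule and confirm that the caller is eventually routed to another channel instead of being re-prompted forever. This is a sketch under stated assumptions. `FallbackPolicy`, `replay`, the action names, and the limit of two retries are all hypothetical and are not SipPulse AI configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FallbackPolicy:
    """Hypothetical escalation rule: re-ask a limited number of times, then stop using voice."""
    max_retries: int = 2

    def next_action(self, failed_attempts: int) -> str:
        if failed_attempts == 0:
            return "continue"
        # Re-prompt while the caller still has retries left.
        if failed_attempts <= self.max_retries:
            return "reprompt"
        # Retries exhausted: offer an SMS link, a website, or a human agent instead.
        return "offer_alternative_channel"


def replay(results: List[bool], policy: FallbackPolicy) -> List[str]:
    """Replay recognition outcomes (True = recognized) and record each decision."""
    actions = []
    failures = 0
    for recognized in results:
        if recognized:
            actions.append("continue")
            break
        failures += 1
        action = policy.next_action(failures)
        actions.append(action)
        if action == "offer_alternative_channel":
            break
    return actions


# Repeated failures should end in an escalation, not an endless re-prompt loop.
assert replay([False, False, False, False], FallbackPolicy()) == [
    "reprompt", "reprompt", "offer_alternative_channel"]
```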

Prompt Design Guidelines:

- Prioritize important information at the beginning

- Use active voice and conversational language

- Avoid jargon, technical terms, or complex sentence structures

- Design for simple Yes/No or number-based inputs when possible

Alternative Data Collection Strategies:

- Direct users to web forms for complex data entry

- Implement omnichannel solutions (start in voice, continue in text)

- Use DTMF (touch-tone) input for account numbers or other critical information

- For authentication, consider identifying callers by their phone number (caller ID) instead of by voice identity
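When DTMF replaces speech for an account number, the collected keypresses still need validation before use. The following is a minimal sketch that assumes the keypresses arrive as a string. The conventions are assumptions, not documented SipPulse behavior: '#' ends the entry, '*' discards everything typed before it, and account numbers have a fixed length of 8 digits. `parse_dtmf_account` is a hypothetical helper.

```python
from typing import Optional

DTMF_DIGITS = set("0123456789")


def parse_dtmf_account(keys: str, expected_length: int = 8) -> Optional[str]:
    """Validate an account number typed on the keypad (hypothetical conventions).

    Assumptions: '#' ends the entry, '*' discards everything typed before it,
    and account numbers have a fixed length.
    """
    if "*" in keys:
        keys = keys[keys.rindex("*") + 1:]  # keep only what follows the last '*'
    if not keys.endswith("#"):
        return None  # entry not finished yet
    digits = keys[:-1]
    if len(digits) != expected_length or not set(digits) <= DTMF_DIGITS:
        return None  # wrong length, or a non-digit key such as 'A'
    return digits
```

Returning `None` instead of raising an error lets the dialog logic treat an invalid entry like any other recognition failure, so it can feed into the same fallback path.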

Performance Monitoring:

- Track recognition failure rates by prompt type

- Measure conversation duration and completion rates

- Identify and optimize high-failure interactions

- Continuously refine based on real-world performance data
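The monitoring metrics above are simple ratios once each turn and each call is logged. The following is a minimal sketch that assumes you export your own test or production logs into the hypothetical `Turn` and `CallRecord` shapes shown here. These are not a SipPulse AI export format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Turn:
    prompt_type: str  # hypothetical label, e.g. "menu", "yes_no", "account_number"
    recognized: bool  # did recognition yield a usable answer?


@dataclass
class CallRecord:
    duration_s: float  # total conversation length in seconds
    completed: bool    # did the caller reach the goal of the flow?


def failure_rate_by_prompt(turns: List[Turn]) -> Dict[str, float]:
    """Fraction of turns per prompt type where recognition failed."""
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for turn in turns:
        totals[turn.prompt_type] += 1
        if not turn.recognized:
            failures[turn.prompt_type] += 1
    return {p: failures[p] / totals[p] for p in totals}


def summarize_calls(calls: List[CallRecord]) -> Dict[str, float]:
    """Completion rate and mean duration across calls."""
    if not calls:
        raise ValueError("no calls to summarize")
    return {
        "completion_rate": sum(c.completed for c in calls) / len(calls),
        "mean_duration_s": sum(c.duration_s for c in calls) / len(calls),
    }
```

Sorting `failure_rate_by_prompt` output by value gives a direct list of the high-failure interactions to optimize first.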

These best practices reflect the fundamental limitations of voice interfaces. By avoiding error-prone tasks such as collecting names and email addresses, and by offering appropriate alternatives, SipPulse AI voice agents can deliver better accuracy, efficiency, and user satisfaction.

3.2 Voice Agent Testing Methodology

Setting Up Testing Sessions

Starting a Voice Test:

- Access the voice testing playground through the agents dashboard

- Ensure proper microphone setup and audio device selection

- Verify that agent connection status shows "TRUE" before starting the test

Audio Setup Best Practices:

- Test in a quiet environment initially, then gradually introduce background noise

- Use various microphone types to assess speech recognition performance

- Test with different speech volumes and distances from the microphone

Testing Specific Voice Functions

Speech Recognition Accuracy:

- Test pronunciation of company names, products, and technical terms

- Validate recognition of different accents and speech patterns

- Verify handling of speech disfluencies (um, uh, stuttering, restarts)
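To compare recognition accuracy across speakers or accents, you can score each chat-window transcript against the sentence the tester was asked to read. The usual measure is word error rate (WER): substitutions, deletions, and insertions divided by the number of reference words. The following sketch assumes the transcript is copied out of the playground by hand. `word_error_rate` is a hypothetical helper, and the normalization (lowercasing, dropping punctuation) is one simple choice among several.

```python
from typing import List


def _normalize(text: str) -> List[str]:
    # Lowercase and drop punctuation so "Support." and "support" compare equal.
    cleaned = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return cleaned.split()


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref, hyp = _normalize(reference), _normalize(hypothesis)
    if not ref:
        raise ValueError("reference must contain at least one word")
    # At the start of row i, prev[j] is the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, reading "Transfer me to support." and seeing "transfer me to sport" in the chat window gives one substitution out of four words, a WER of 0.25.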

Voice Interaction Flow:

- Test interruptions during agent speech

- Evaluate silence handling and timeout responses

- Analyze turn-taking dynamics and conversation pacing

Audio Quality Assessment:

- Evaluate text-to-speech clarity and naturalness

- Check that pauses and intonation sound natural and appropriate

- Verify pronunciation of specialized terminology

3.3 Common Testing Scenarios for Voice Agents

Basic Voice Interactions:

- Agent introduction and welcome message

- Simple question-and-answer exchanges

- Voice command execution (e.g., "transfer to support"). This works only over SIP and cannot be tested in the playground.

Complex Voice Scenarios:

- Multi-turn conversations requiring context maintenance

- Information gathering through sequential questions

- Error correction and clarification requests

Edge Cases:

- Background noise interference

- Multiple speakers talking simultaneously

- Very short or very long utterances

- Heavily accented speech

3.4 Analyzing Test Results

Real-Time Analysis:

- Monitor transcription accuracy in the chat window

- Observe response latency after speech input

- Evaluate appropriateness of agent responses
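Response latency is easier to judge from a few summary numbers than from impressions during a call. The following sketch assumes you have noted, for each turn, when the user stopped speaking and when the agent's audio started, in seconds. You might take these from a recording of the session. The `latency_stats` helper and its input format are hypothetical. The 95th percentile uses the nearest-rank method.

```python
import math
import statistics
from typing import Dict, List, Tuple


def latency_stats(turns: List[Tuple[float, float]]) -> Dict[str, float]:
    """Each turn is (user_speech_end_s, agent_audio_start_s), in seconds."""
    if not turns:
        raise ValueError("no turns to analyze")
    latencies = sorted(agent_start - user_end for user_end, agent_start in turns)
    # Nearest-rank 95th percentile: the smallest value covering 95% of turns.
    p95_index = math.ceil(0.95 * len(latencies)) - 1
    return {
        "median_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }
```

Tracking the tail (p95 and max) alongside the median matters because a single long pause is often what makes a caller repeat themselves or hang up.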

Post-Test Review:

- Examine complete conversation transcriptions

- Analyze where communication failures or errors occurred

- Identify opportunities for prompt or model improvements

Iterative Refinement:

- Make incremental adjustments to agent configuration

- Retest specific failure scenarios

- Conduct A/B testing between different agent versions
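When comparing two agent versions by completion rate, a standard two-proportion z-test indicates whether the observed difference is larger than chance would explain. The following is a minimal sketch. `two_proportion_z_test` is a hypothetical helper, not a SipPulse AI feature. It uses the pooled normal approximation, which is reasonable only when each version has a fair number of both completed and failed calls. With small test samples, only large differences will reach significance.

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, calls_a: int,
                          successes_b: int, calls_b: int) -> Tuple[float, float]:
    """Compare completion rates of agent versions A and B.

    Returns (z, two_sided_p_value) using the pooled normal approximation.
    """
    if min(calls_a, calls_b) <= 0:
        raise ValueError("each version needs at least one call")
    rate_a = successes_a / calls_a
    rate_b = successes_b / calls_b
    pooled = (successes_a + successes_b) / (calls_a + calls_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / calls_a + 1 / calls_b))
    if se == 0:
        raise ValueError("every call in both versions completed, or none did")
    z = (rate_b - rate_a) / se
    # Two-sided p-value for a standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 30 of 100 completed calls for version A against 50 of 100 for version B gives z of about 2.89 and p below 0.01. That result favors version B, while the same percentages over 10 calls each would not be conclusive.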

By effectively using the SipPulse voice testing playground, development teams can identify and resolve issues before deploying voice agents in production environments, ensuring higher quality interactions and better user experiences when handling real customer calls.