Post Analysis

Post Analysis automatically processes conversations after they end, extracting structured insights, summaries, and metrics. It turns raw conversations into data you can report on and act on, without manual review.

What is Post Analysis?

When a conversation ends, Post Analysis runs a separate AI analysis pass that can:

| Capability | Example Output |
|---|---|
| Summarize conversations | "Customer inquired about billing, resolved by explaining payment due date" |
| Extract structured data | { "topic": "billing", "resolved": true, "sentiment": "positive" } |
| Score interactions | Customer satisfaction: 4/5, Agent performance: 92% |
| Classify intents | Primary: "billing_inquiry", Secondary: "plan_upgrade_interest" |
| Flag follow-ups | "Customer requested callback about enterprise pricing" |

Additional Token Cost

Post Analysis runs a separate LLM call after each conversation, which incurs additional token costs. The cost grows with the length of the conversation and with the size of your analysis schema and prompt.
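
To see how these factors combine, here is a rough cost model. It is a sketch under stated assumptions, not the platform's billing logic: it assumes the analysis call's input is the transcript plus your analysis prompt and schema, its output is the extracted JSON, and both are billed per token. The function names and the prices are hypothetical placeholders.

```javascript
// Rough cost of one Post Analysis call (hypothetical model, not the platform's billing).
// Assumes input = transcript + analysis prompt + schema, output = extracted JSON,
// both billed per token. Prices are placeholders in dollars per 1M tokens.
function estimateAnalysisCost(tokens, prices) {
  const inputTokens = tokens.transcript + tokens.prompt + tokens.schema;
  return (inputTokens * prices.inputPerMillion + tokens.output * prices.outputPerMillion) / 1e6;
}

// Monthly total; a sampleRate below 1 models analyzing only a fraction of conversations.
function estimateMonthlyCost(perConversation, conversationsPerMonth, sampleRate = 1) {
  return perConversation * conversationsPerMonth * sampleRate;
}

// Placeholder numbers only.
const perCall = estimateAnalysisCost(
  { transcript: 3000, prompt: 300, schema: 200, output: 150 },
  { inputPerMillion: 1.0, outputPerMillion: 4.0 },
);
console.log(perCall.toFixed(4)); // "0.0041"
console.log(estimateMonthlyCost(perCall, 20000).toFixed(2)); // "82.00"
```

In this example the transcript accounts for 3,000 of the 3,500 input tokens, so longer conversations move the total more than prompt or schema edits do.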

How It Works

```text
Conversation Ends
        ↓
Post Analysis Triggered
        ↓
┌─────────────────────────────────────┐
│ LLM analyzes full conversation:     │
│ - Applies your analysis prompts     │
│ - Extracts structured data          │
│ - Generates summaries               │
└─────────────────────────────────────┘
        ↓
Results stored and/or sent via webhook
```

Trigger timing:

- Voice calls: when the call ends

- Chat: when the thread is marked complete or times out

- API threads: when the thread is explicitly closed

Configuration

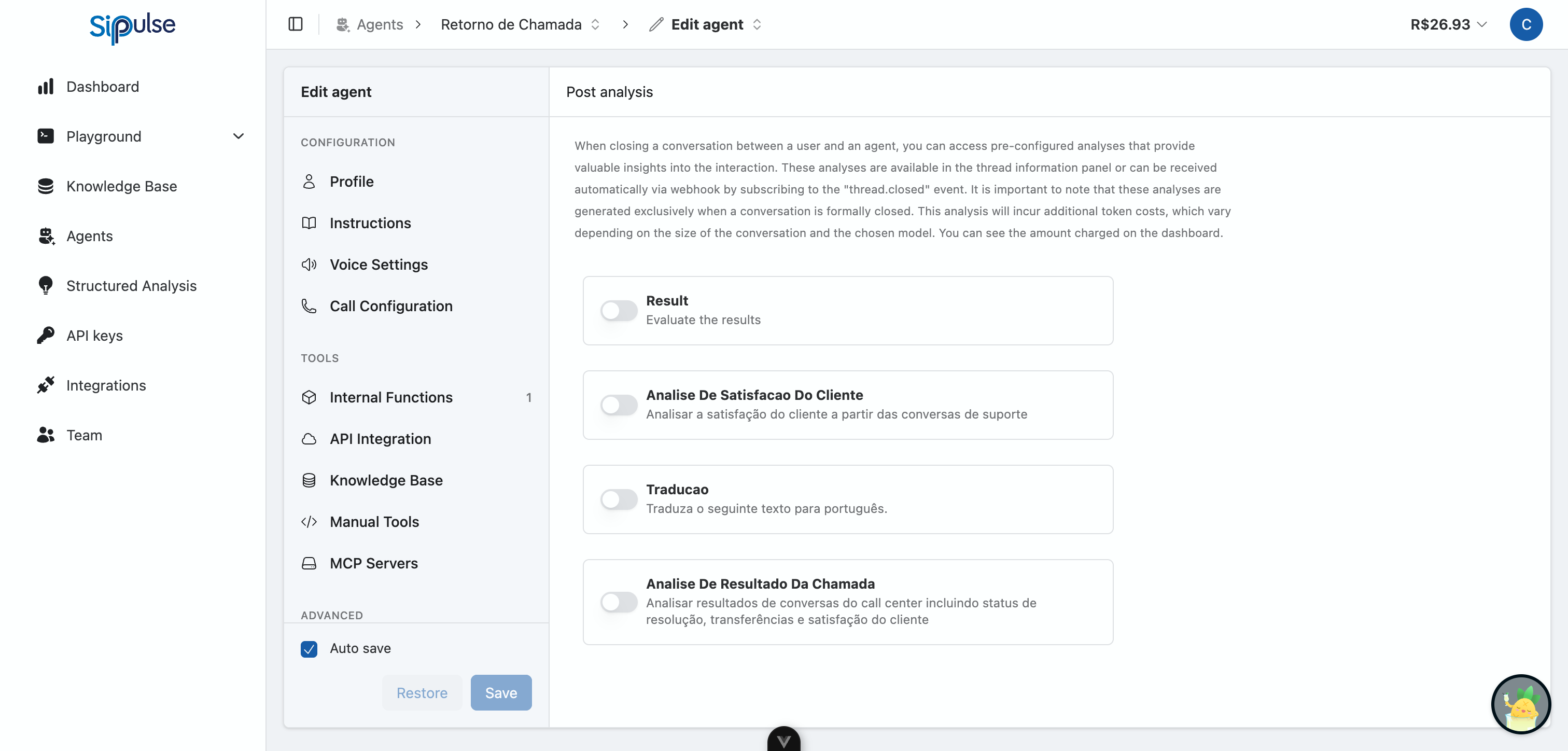

Enabling Post Analysis

- Navigate to Agent Configuration > Advanced

- Find the Post Analysis section

- Toggle Enable Post Analysis on

- Configure your analysis schema and prompt

Analysis Schema

Define what data to extract using JSON Schema:

```json
{
  "type": "object",
  "properties": {
    "summary": {
      "type": "string",
      "description": "2-3 sentence summary of the conversation"
    },
    "topic": {
      "type": "string",
      "enum": ["billing", "technical_support", "sales", "general", "complaint"],
      "description": "Primary topic of the conversation"
    },
    "resolved": {
      "type": "boolean",
      "description": "Whether the customer's issue was resolved"
    },
    "sentiment": {
      "type": "string",
      "enum": ["positive", "neutral", "negative"],
      "description": "Customer's overall sentiment"
    },
    "follow_up_needed": {
      "type": "boolean",
      "description": "Whether follow-up action is required"
    },
    "follow_up_notes": {
      "type": "string",
      "description": "Details about required follow-up, if any"
    }
  },
  "required": ["summary", "topic", "resolved", "sentiment"]
}
```

Analysis Prompt

Customize instructions for the analysis:

```text
Analyze this customer support conversation and extract the following:

1. **Summary**: Provide a brief, factual summary of what was discussed and the outcome.
2. **Topic Classification**: Categorize into one of these topics:
   - billing: Payment, invoices, charges, refunds
   - technical_support: Product issues, bugs, how-to questions
   - sales: Pricing, plans, upgrades, new purchases
   - general: General inquiries, account management
   - complaint: Dissatisfaction, escalation requests
3. **Resolution**: Was the customer's primary question/issue resolved in this conversation?
4. **Sentiment**: Based on the customer's language and responses, gauge their overall sentiment.
5. **Follow-up**: Note if any action items or callbacks were promised.

Be objective and base your analysis on the actual conversation content.
```

Example Schemas

Customer Support Analysis

```json
{
  "type": "object",
  "properties": {
    "summary": {
      "type": "string",
      "description": "Concise summary of the support interaction"
    },
    "issue_category": {
      "type": "string",
      "enum": ["account_access", "billing", "product_bug", "feature_request", "how_to", "other"]
    },
    "resolution_status": {
      "type": "string",
      "enum": ["resolved", "escalated", "pending", "unresolved"]
    },
    "customer_effort_score": {
      "type": "integer",
      "minimum": 1,
      "maximum": 5,
      "description": "Estimated effort customer had to exert (1=easy, 5=difficult)"
    },
    "escalation_reason": {
      "type": "string",
      "description": "If escalated, explain why"
    }
  }
}
```

Sales Qualification Analysis

```json
{
  "type": "object",
  "properties": {
    "lead_quality": {
      "type": "string",
      "enum": ["hot", "warm", "cold", "unqualified"],
      "description": "Lead qualification score"
    },
    "budget_mentioned": {
      "type": "boolean"
    },
    "budget_range": {
      "type": "string",
      "description": "Estimated budget if mentioned"
    },
    "timeline": {
      "type": "string",
      "description": "Purchase timeline if discussed"
    },
    "decision_maker": {
      "type": "boolean",
      "description": "Is the contact a decision maker?"
    },
    "competitor_mentioned": {
      "type": "array",
      "items": { "type": "string" },
      "description": "Any competitors mentioned"
    },
    "next_step": {
      "type": "string",
      "description": "Recommended next action"
    }
  }
}
```

Quality Assurance Analysis

```json
{
  "type": "object",
  "properties": {
    "greeting_proper": {
      "type": "boolean",
      "description": "Did the agent properly greet the customer?"
    },
    "identification_verified": {
      "type": "boolean",
      "description": "Was customer identity verified when required?"
    },
    "accurate_information": {
      "type": "boolean",
      "description": "Was information provided accurate?"
    },
    "professional_tone": {
      "type": "boolean",
      "description": "Did agent maintain professional tone throughout?"
    },
    "closing_proper": {
      "type": "boolean",
      "description": "Did agent properly close the conversation?"
    },
    "compliance_issues": {
      "type": "array",
      "items": { "type": "string" },
      "description": "Any compliance concerns noted"
    },
    "overall_score": {
      "type": "integer",
      "minimum": 0,
      "maximum": 100
    }
  }
}
```

Accessing Analysis Results

In the Platform

- Navigate to Conversations or Thread History

- Select a completed conversation

- View the Analysis tab to see extracted data

Via Webhook

Configure a webhook to receive analysis results automatically:

```json
{
  "event": "conversation.analyzed",
  "conversation_id": "conv_abc123",
  "agent_id": "agt_xyz",
  "timestamp": "2025-01-18T15:30:00Z",
  "analysis": {
    "summary": "Customer called about a billing discrepancy...",
    "topic": "billing",
    "resolved": true,
    "sentiment": "positive",
    "follow_up_needed": false
  }
}
```

See Webhooks for configuration details.
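
Before storing or acting on a delivered result, you may want to check it against the schema you configured, in case a result ever arrives with a missing field or a value outside an enum. A minimal sketch, assuming your web framework has already parsed the request body as JSON; `validate`, `handleAnalyzedEvent`, and `ANALYSIS_SCHEMA` are illustrative names, and the validator understands only the JSON Schema keywords that appear on this page.

```javascript
// Checks a value against the subset of JSON Schema used on this page
// (type, enum, minimum, maximum, properties, required, items). Not a full validator.
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

function validate(schema, value, path = 'analysis') {
  const errors = [];
  switch (schema.type) {
    case 'object': {
      if (typeof value !== 'object' || value === null || Array.isArray(value)) {
        return [`${path}: expected object`];
      }
      for (const key of schema.required ?? []) {
        if (!has(value, key)) errors.push(`${path}.${key}: missing required field`);
      }
      for (const [key, sub] of Object.entries(schema.properties ?? {})) {
        if (has(value, key)) errors.push(...validate(sub, value[key], `${path}.${key}`));
      }
      return errors;
    }
    case 'array':
      if (!Array.isArray(value)) return [`${path}: expected array`];
      value.forEach((item, i) => {
        if (schema.items) errors.push(...validate(schema.items, item, `${path}[${i}]`));
      });
      return errors;
    case 'integer':
      if (!Number.isInteger(value)) return [`${path}: expected integer`];
      break;
    case 'string':
    case 'boolean':
      if (typeof value !== schema.type) return [`${path}: expected ${schema.type}`];
      break;
  }
  if (schema.enum && !schema.enum.includes(value)) {
    errors.push(`${path}: ${JSON.stringify(value)} is not an allowed value`);
  }
  if (schema.minimum !== undefined && value < schema.minimum) errors.push(`${path}: below minimum`);
  if (schema.maximum !== undefined && value > schema.maximum) errors.push(`${path}: above maximum`);
  return errors;
}

// The "Analysis Schema" example from this page, without descriptions.
const ANALYSIS_SCHEMA = {
  type: 'object',
  properties: {
    summary: { type: 'string' },
    topic: { type: 'string', enum: ['billing', 'technical_support', 'sales', 'general', 'complaint'] },
    resolved: { type: 'boolean' },
    sentiment: { type: 'string', enum: ['positive', 'neutral', 'negative'] },
    follow_up_needed: { type: 'boolean' },
    follow_up_notes: { type: 'string' },
  },
  required: ['summary', 'topic', 'resolved', 'sentiment'],
};

// Process one webhook delivery whose JSON body has already been parsed.
function handleAnalyzedEvent(body, schema = ANALYSIS_SCHEMA) {
  if (body.event !== 'conversation.analyzed') return { ignored: true };
  const errors = validate(schema, body.analysis);
  return { conversationId: body.conversation_id, valid: errors.length === 0, errors };
}
```

Run against the example payload above, `handleAnalyzedEvent` returns `valid: true` with an empty error list. Returning the errors instead of throwing lets you store the result anyway and log what was off.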

Via API

Retrieve analysis for a specific conversation:

```http
GET /api/conversations/{conversation_id}/analysis
Authorization: Bearer {api_key}
```

Best Practices

1. Keep Schemas Focused

Don't try to extract everything. Focus on data you'll actually use:

❌ Over-engineered:

```jsonc
{
  "properties": {
    "summary": {},
    "detailed_summary": {},
    "brief_summary": {},
    "executive_summary": {},
    // ... 30 more fields
  }
}
```

✅ Focused:

```json
{
  "properties": {
    "summary": {},
    "topic": {},
    "resolved": {},
    "follow_up": {}
  }
}
```

2. Use Enums for Consistency

Structured values make reporting easier:

```json
{
  "sentiment": {
    "type": "string",
    "enum": ["positive", "neutral", "negative"]
  }
}
```

This ensures you get one consistent value instead of variations like "happy", "satisfied", "good", and "positive" for the same sentiment.
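
Enums also keep aggregation simple: every result falls into one of a fixed set of buckets, so a value outside that set is a signal worth investigating. A small sketch (the `tallySentiment` helper is hypothetical) that counts sentiment values and collects any unexpected ones separately:

```javascript
// Tally sentiment across analysis results. With an enum in the schema every value
// should be one of SENTIMENTS; anything else is counted separately so drift shows up.
const SENTIMENTS = ['positive', 'neutral', 'negative'];

function tallySentiment(analyses) {
  const counts = Object.fromEntries(SENTIMENTS.map((s) => [s, 0]));
  const unexpected = new Map(); // value -> count, for anything outside the enum
  for (const { sentiment } of analyses) {
    if (SENTIMENTS.includes(sentiment)) {
      counts[sentiment] += 1;
    } else {
      unexpected.set(sentiment, (unexpected.get(sentiment) ?? 0) + 1);
    }
  }
  return { counts, unexpected };
}
```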

3. Write Clear Descriptions

The description field guides the analysis:

```json
{
  "customer_effort_score": {
    "type": "integer",
    "minimum": 1,
    "maximum": 5,
    "description": "Rate how much effort the customer had to exert: 1=issue resolved immediately, 5=customer had to repeat themselves multiple times or got transferred"
  }
}
```

4. Test with Real Conversations

Before deploying, test your schema against actual conversation transcripts to verify:

- Fields are extractable from typical conversations

- Categories/enums cover common cases

- Analysis prompt produces accurate results
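
One way to run these checks is to label a handful of transcripts by hand and compare your labels with what Post Analysis returns. The sketch below is a hypothetical helper, not a platform feature: for each field it reports how often a value was filled in (extractable), how often it matched your label (accurate), and how often it fell back to a catch-all value such as "general" or "other" (both used in the schemas on this page). A high catch-all rate suggests the enum is missing a common case.

```javascript
// Compare Post Analysis output with hand-labelled answers for a batch of real transcripts.
// Each sample is { expected, actual }: your label and the model's analysis object.
// Exact matching (===) suits scalar fields such as enums, booleans, and integers.
function evaluateSchema(samples, fields, catchAll = ['general', 'other']) {
  const n = samples.length;
  const report = {};
  if (n === 0) return report;
  for (const field of fields) {
    let filled = 0;
    let correct = 0;
    let catchAllHits = 0;
    for (const { expected, actual } of samples) {
      const value = actual[field];
      if (value !== undefined && value !== null && value !== '') filled += 1; // extractable?
      if (value === expected[field]) correct += 1; // accurate?
      if (catchAll.includes(value)) catchAllHits += 1; // enum gap?
    }
    report[field] = { fillRate: filled / n, accuracy: correct / n, catchAllRate: catchAllHits / n };
  }
  return report;
}
```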

5. Consider Cost vs. Value

Post Analysis uses additional tokens, so weigh coverage against cost:

- Analyze all conversations when every interaction can yield high-value insights

- Sample conversations when the goal is quality monitoring

- Skip analysis for very short interactions
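
Assuming you have a point in your own integration where you decide, per conversation, whether an analysis should run or be kept, a sampling policy can be a small pure function. `shouldAnalyze`, its options, and the 3-turn minimum are hypothetical. It hashes the conversation ID with 32-bit FNV-1a (over UTF-16 code units), so re-processing the same conversation always gives the same answer.

```javascript
// Hypothetical sampling policy: skip short conversations, then keep a
// deterministic fraction (sampleRate) of the rest based on a hash of the ID.
function shouldAnalyze(conversationId, turnCount, { sampleRate = 1, minTurns = 3 } = {}) {
  if (turnCount < minTurns) return false; // skip very short interactions
  // 32-bit FNV-1a hash of the ID, kept unsigned with >>> 0.
  let hash = 0x811c9dc5;
  for (let i = 0; i < conversationId.length; i++) {
    hash ^= conversationId.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash / 2 ** 32 < sampleRate; // map to [0, 1) and compare with the rate
}
```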

Use Cases

Customer Success Dashboard

Track resolution rates, sentiment trends, and common issues:

| Metric | Source Field |
|---|---|
| Resolution Rate | resolved (boolean) |
| Sentiment Trend | sentiment (enum) |
| Top Issues | topic (enum) count |
| Follow-up Queue | follow_up_needed filter |
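
If you collect the webhook payloads yourself, these metrics are simple aggregations. A sketch assuming each event has the shape of the webhook example above and the field names of the Analysis Schema example; `dashboardMetrics` is a hypothetical helper, and it groups the sentiment trend by the date portion of each event's timestamp string.

```javascript
// Compute the dashboard metrics from conversation.analyzed payloads.
function dashboardMetrics(events) {
  const levels = ['positive', 'neutral', 'negative'];
  let resolved = 0;
  const sentimentByDay = {}; // Sentiment Trend: "YYYY-MM-DD" -> counts per sentiment
  const topicCounts = new Map(); // Top Issues: topic -> count
  const followUpQueue = []; // Follow-up Queue: conversation IDs
  for (const { conversation_id, timestamp, analysis } of events) {
    if (analysis.resolved === true) resolved += 1;
    const day = timestamp.slice(0, 10); // date part of an ISO 8601 timestamp
    sentimentByDay[day] ??= Object.fromEntries(levels.map((s) => [s, 0]));
    if (levels.includes(analysis.sentiment)) sentimentByDay[day][analysis.sentiment] += 1;
    topicCounts.set(analysis.topic, (topicCounts.get(analysis.topic) ?? 0) + 1);
    if (analysis.follow_up_needed === true) followUpQueue.push(conversation_id);
  }
  return {
    resolutionRate: events.length > 0 ? resolved / events.length : 0,
    sentimentByDay,
    topIssues: [...topicCounts].sort((a, b) => b[1] - a[1]), // [topic, count], most frequent first
    followUpQueue,
  };
}
```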

Sales Pipeline Integration

Push qualified leads to your CRM:

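```javascript
// Webhook handler example. `crm` is your own CRM client and `conversation` your own
// record of the conversation; neither appears in the example webhook payload.
async function handleSalesAnalysis(analysis, conversation, crm) {
  if (analysis.lead_quality === 'hot' && analysis.decision_maker) {
    await crm.createOpportunity({
      contact: conversation.customer,
      source: 'AI Agent',
      budget: analysis.budget_range,
      timeline: analysis.timeline,
      // The Sales Qualification schema above has no summary field; add one to
      // your schema if you want notes populated here.
      notes: analysis.summary
    });
  }
}
```

Quality Monitoring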

Automatically flag conversations for review:

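```javascript
// Alert on low quality scores. `alertTeam` is your own notification function.
async function checkQuality(analysis, conversationId, alertTeam) {
  // compliance_issues is not required by the QA schema, so it may be absent.
  const issues = analysis.compliance_issues ?? [];
  if (analysis.overall_score < 70 || issues.length > 0) {
    await alertTeam('QA Review Needed', conversationId);
  }
}
```

Troubleshooting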

Analysis Not Running

- Verify Post Analysis is enabled

- Check that conversations are being properly closed

- Review schema for syntax errors
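
A quick local check can catch schema mistakes before you paste the schema into the platform. This hypothetical `lintSchema` helper reports JSON syntax errors and a few valid-JSON mistakes, such as a required field missing from properties. It assumes a top-level object schema, as in every example on this page, and it is not a full JSON Schema validator.

```javascript
// Pre-flight check for an analysis schema pasted as text. Catches JSON syntax errors
// and a few valid-JSON mistakes; not a full JSON Schema meta-validator.
function lintSchema(text) {
  let schema;
  try {
    schema = JSON.parse(text);
  } catch (err) {
    return [`JSON syntax error: ${err.message}`];
  }
  if (typeof schema !== 'object' || schema === null || Array.isArray(schema)) {
    return ['schema must be a JSON object'];
  }
  const problems = [];
  // Assumption: the top level is an object schema, as in every example on this page.
  if (schema.type !== 'object') problems.push('top-level "type" should be "object"');
  const props = schema.properties ?? {};
  if (Object.keys(props).length === 0) problems.push('no "properties" defined');
  const required = schema.required ?? [];
  if (!Array.isArray(required)) {
    problems.push('"required" should be an array of property names');
  } else {
    for (const key of required) {
      if (!Object.prototype.hasOwnProperty.call(props, key)) {
        problems.push(`required field "${key}" is not defined in "properties"`);
      }
    }
  }
  for (const [key, def] of Object.entries(props)) {
    if (def?.enum !== undefined && (!Array.isArray(def.enum) || def.enum.length === 0)) {
      problems.push(`"${key}".enum should be a non-empty array`);
    }
  }
  return problems;
}
```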

Incorrect Extractions

- Improve description fields in schema

- Add examples to your analysis prompt

- Ensure enum values cover all cases

- Test with representative conversation samples

High Costs

- Reduce schema complexity

- Use shorter analysis prompts

- Consider sampling instead of analyzing all conversations

- Use smaller models for analysis if available

Related Documentation

- Webhooks - Receive analysis results automatically

- Structured Analysis - Broader structured output capabilities

- Agent Configuration - Complete agent setup